Hodge duality fundamentals

In my opinion, the Hodge dual is often presented with unnecessary complexity: from the start, it frequently involves more than three dimensions, varying metric signatures, and a formal mathematical approach. Yet the concept is intuitive in the familiar 3-dimensional Euclidean space we experience daily, and from there the generalization to 4-dimensional Minkowski space is natural.

The first part of this article presents the core intuition behind Hodge duality in 3-dimensional Euclidean space and is meant to be easy to follow. The second part prepares the generalization to any number of dimensions and metric signatures: I lay out the relation between the exterior product, the matrix determinant, surfaces, volumes, and hypervolumes, which allows the inner product to be generalized to k–vectors. Finally, I systematically calculate the Hodge duals of vectors, bivectors, trivectors, and quadvectors in Minkowski spacetime with metric signature \((+,-,-,-)\).

For a straightforward method to calculate the Hodge dual of k–forms using the interior product \(⌟\), have a look at the page Hodge dual computations. That page also provides an easier method to calculate the inner product between k–forms.

This discussion assumes you have a solid understanding of the exterior product and Élie Cartan’s differential forms.

Notation on this page is standard and should be widely recognized. Note that basis vectors \(\mathbf{e}_μ\) are denoted with the partial derivative symbol \(∂_μ\):

\[\mathbf{e}_μ = ∂_μ\]

Not all readers may have considered partial derivatives as basis vectors before. For our purpose, this is simply a matter of notation. For the inner product, I use either the dot notation \(\cdot\) or, when it helps readability, the bra-ket notation \(\braket{|}\) from quantum mechanics.

I would like to point out Michael Penn’s work on Differential Forms. In particular, the following videos overlap considerably with the content of this page:

Differential Forms | The Hodge operator via an inner product.

Differential Forms | The Minkowski metric and the Hodge operator.

These videos provide an alternative, yet equivalent, approach to the conclusions presented here. As an added bonus, he uses the same metric signature \((+,-,-,-)\).

Duality in three dimensions

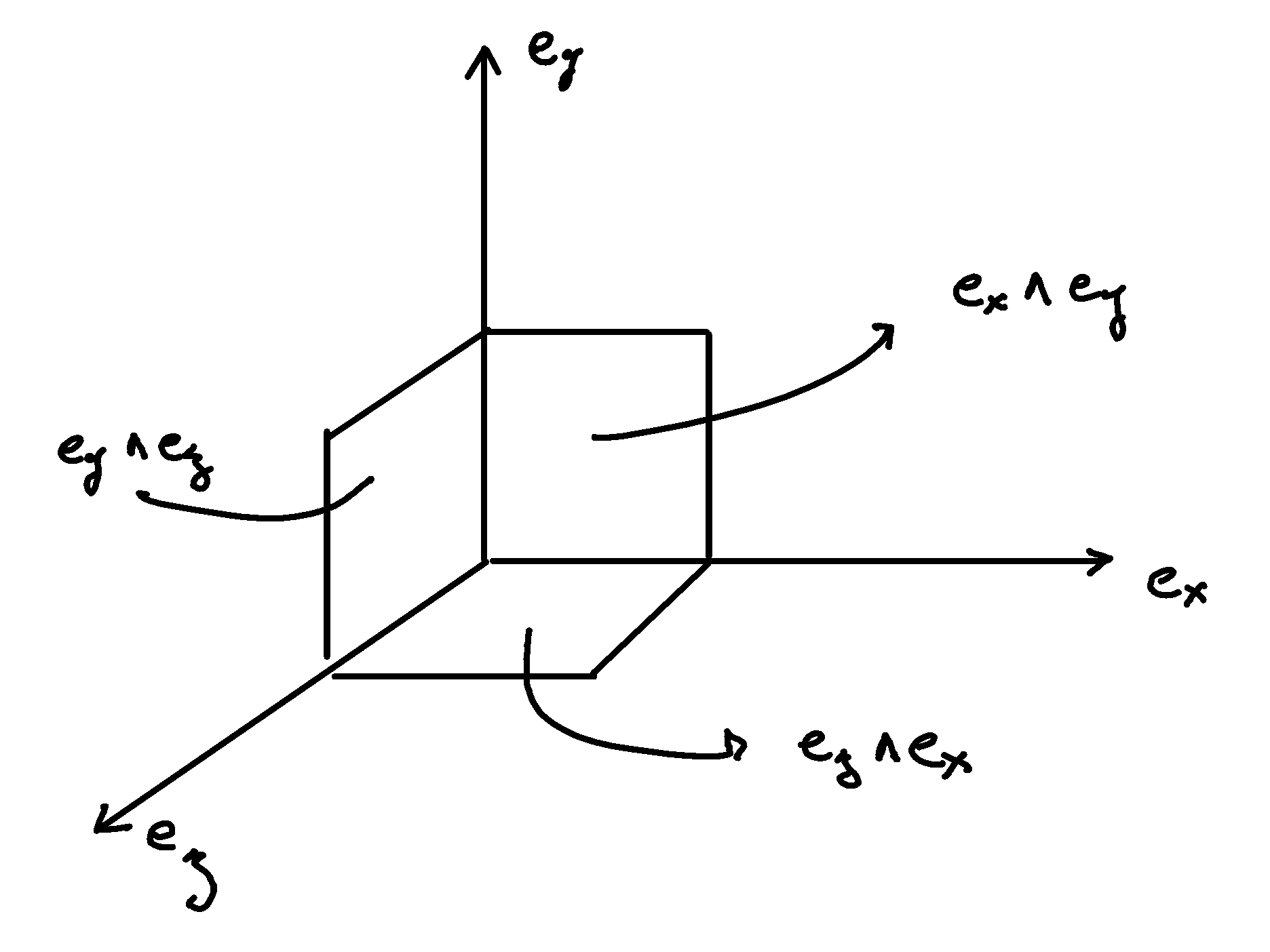

First, consider a coordinate basis \(∂_x\), \(∂_y\), and \(∂_z\) in 3 dimensions, corresponding to our intuitive understanding of space. Observe that we have defined not merely three unit vectors but also three unit surfaces, which we name using the wedge symbol \(∧\). The surface spanned by the \(x\) and \(y\) axes is named \(∂_x ∧ ∂_y\), the one spanned by the \(y\) and \(z\) axes \(∂_y ∧ ∂_z\), and the one spanned by the \(z\) and \(x\) axes \(∂_z ∧ ∂_x\).

The surfaces are deliberately named in counterclockwise order. The reason is that two vectors define not a mere surface but an oriented surface: its magnitude can be negative, depending on the chosen orientation. Here, we take the convention that counterclockwise-oriented surfaces are positive. For example, \(∂_z ∧ ∂_x = - ∂_x ∧ ∂_z\).

Note that we have not only decided on a naming convention but also created new mathematical objects, built from two vectors and a new product denoted by the wedge symbol \(∧\). These objects are called bivectors, and the new product is called the exterior product. Their fundamental property, antisymmetry, follows directly from the discussion of surface orientation:

\[∂_i ∧ ∂_j = - ∂_j ∧ ∂_i\]

Necessarily, \(∂_i ∧ ∂_i = 0\), since two copies of the same vector cannot span a surface. This also follows from antisymmetry: \(∂_i ∧ ∂_i = -∂_i ∧ ∂_i\) implies \(∂_i ∧ ∂_i = 0\).

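In 3 dimensions, each basis bivector can be associated with a unique basis vector, the one perpendicular to its surface: \(∂_y ∧ ∂_z\) with \(∂_x\), \(∂_z ∧ ∂_x\) with \(∂_y\), and \(∂_x ∧ ∂_y\) with \(∂_z\). We denote this relation with the star symbol \(⋆\):

\[⋆(∂_y ∧ ∂_z) = ∂_x, \quad ⋆(∂_z ∧ ∂_x) = ∂_y, \quad ⋆(∂_x ∧ ∂_y) = ∂_z\]

This association defines, for every oriented surface, a unique dual vector called its Hodge dual. Hodge duality is denoted by the star symbol \(⋆\), called the Hodge star operator. The relation holds in both directions:

\[⋆∂_x = ∂_y ∧ ∂_z, \quad ⋆∂_y = ∂_z ∧ ∂_x, \quad ⋆∂_z = ∂_x ∧ ∂_y\]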

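In three dimensions, the Hodge dual of the exterior product of two vectors is their cross product. The cross product defines a vector perpendicular to the surface spanned by the two vectors, whose length equals the area of that surface:

\[∂_x × ∂_y = ⋆(∂_x ∧ ∂_y) = ∂_z, \quad ∂_y × ∂_z = ⋆(∂_y ∧ ∂_z) = ∂_x, \quad ∂_z × ∂_x = ⋆(∂_z ∧ ∂_x) = ∂_y\]

More generally, \(a^♯ × b^♯ = ⋆(a^♯ ∧ b^♯)\) for any two vectors \(a^♯\) and \(b^♯\).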

This establishes the deep connection between the Hodge dual, rotations, surfaces, and the cross product.
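The correspondence between the wedge, the star, and the cross product can be checked numerically. The following Python sketch is only an illustration: `wedge2`, `hodge_bivector`, and `cross` are hypothetical helper names, and vectors are assumed to be plain component lists in the order \(∂_x, ∂_y, ∂_z\). It stores a bivector by its components on the basis bivectors, applies the star relations \(⋆(∂_y ∧ ∂_z) = ∂_x\), \(⋆(∂_z ∧ ∂_x) = ∂_y\), \(⋆(∂_x ∧ ∂_y) = ∂_z\), and compares the result with the textbook cross-product formula.

```python
# Basis indices: 0 -> ∂_x, 1 -> ∂_y, 2 -> ∂_z

def wedge2(a, b):
    """Bivector a ∧ b as {(i, j): coefficient of ∂_i ∧ ∂_j} with i < j."""
    return {(i, j): a[i] * b[j] - a[j] * b[i]
            for i in range(3) for j in range(i + 1, 3)}

# 3D Euclidean Hodge star on basis bivectors, as (target index, sign):
# ⋆(∂_y ∧ ∂_z) = ∂_x and ⋆(∂_x ∧ ∂_y) = ∂_z, while ⋆(∂_z ∧ ∂_x) = ∂_y
# means ⋆(∂_x ∧ ∂_z) = -∂_y, because ∂_x ∧ ∂_z = -∂_z ∧ ∂_x.
STAR = {(1, 2): (0, 1), (0, 1): (2, 1), (0, 2): (1, -1)}

def hodge_bivector(bivector):
    """Map a 3D bivector to its Hodge dual vector."""
    vector = [0, 0, 0]
    for blade, coeff in bivector.items():
        index, sign = STAR[blade]
        vector[index] += sign * coeff
    return vector

def cross(a, b):
    """Textbook cross-product formula, for comparison."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

a, b = [1, 2, 3], [4, 5, 6]
print(hodge_bivector(wedge2(a, b)))  # [-3, 6, -3]
print(cross(a, b))                   # [-3, 6, -3]
```

Storing bivectors over index-sorted pairs is a choice of this sketch; it is why \(∂_x ∧ ∂_z\) carries an explicit minus sign in the star table.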

Going one step further, observe that we have defined not only unit surfaces but also a unit volume, denoted \(∂_x ∧ ∂_y ∧ ∂_z\). We can associate the unit volume with numbers:

\[⋆\mathbf{1} = ∂_x ∧ ∂_y ∧ ∂_z\]

as well as:

\[⋆(∂_x ∧ ∂_y ∧ ∂_z) = \mathbf{1}\]

where \(\mathbf{1}\) is the unit number. In other words, any number can be expressed as a multiple of \(\mathbf{1}\).

Pseudo-vectors and pseudo-scalars

As a side quest that may be of particular interest to particle physicists, I discuss the names pseudo-vector and pseudo-scalar. From the vector basis, we have constructed the following objects:

scalars,

vectors,

bivectors corresponding to oriented surfaces, and

trivectors corresponding to oriented volumes.

Place these objects in front of a mirror as a Gedankenexperiment. Observe the image of these objects and notice that:

scalars are unchanged,

vectors are unchanged,

oriented surfaces defined from two vectors are flipped with a change of sign, and

oriented volumes defined as trivectors (i.e. from an oriented surface and a vector) are also flipped with a change of sign.

This is the reason for the name pseudo-vector: bivectors look and behave almost like the vectors they are associated with through Hodge duality. However, unlike a vector, a positively oriented surface becomes negatively oriented in its mirror image, so the image of its Hodge dual vector is flipped.

This is also the reason for the name pseudo-scalar: trivectors look and behave almost like the scalars they are associated with through Hodge duality. However, unlike a scalar, a positively oriented volume becomes negatively oriented in its mirror image, so the image of its Hodge dual scalar is flipped.
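Both sign flips can be illustrated numerically. This Python sketch assumes the mirror is the \(xy\)-plane, i.e. \((x, y, z) \mapsto (x, y, -z)\); any other mirror plane produces the same sign flip. The hypothetical helpers `cross` and `triple` compute the Hodge duals of \(a ∧ b\) and \(a ∧ b ∧ c\) in 3-dimensional Euclidean space. The dual vector of the mirrored surface comes out as the negative of the mirror image of the original dual vector, and the dual scalar of the mirrored volume changes sign.

```python
def reflect_z(v):
    """Mirror image through the xy-plane: (x, y, z) -> (x, y, -z)."""
    return [v[0], v[1], -v[2]]

def cross(a, b):
    """Hodge dual of the oriented surface a ∧ b in 3D Euclidean space."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def triple(a, b, c):
    """Hodge dual of the oriented volume a ∧ b ∧ c: a single number."""
    return sum(x * y for x, y in zip(a, cross(b, c)))

a, b, c = [1, 2, 3], [4, 5, 6], [0, 1, 7]
ma, mb, mc = reflect_z(a), reflect_z(b), reflect_z(c)

# Dual of the mirrored surface vs. mirror image of the dual vector
print(cross(ma, mb))           # [3, -6, -3]
print(reflect_z(cross(a, b)))  # [-3, 6, 3]

# Dual of the mirrored volume vs. the original dual scalar
print(triple(ma, mb, mc))  # 15
print(triple(a, b, c))     # -15
```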

Inner product of k–vectors

The object of this section is to generalize the inner product from vectors to multivectors, which is needed to generalize Hodge duality to any number of dimensions and metric signatures. Indeed, Minkowski space is characterized not only by 4 dimensions but also by the mixed metric signature \((+,-,-,-)\). Intuitively, we can guess that the inner product on multivectors should be influenced by the metric signature and, in turn, that the metric signature will play a role in Hodge duality in Minkowski space. We will see that the inner product is akin to measuring the size of shadows in one, two, three, and, in all generality, k dimensions.

Inner product of vectors

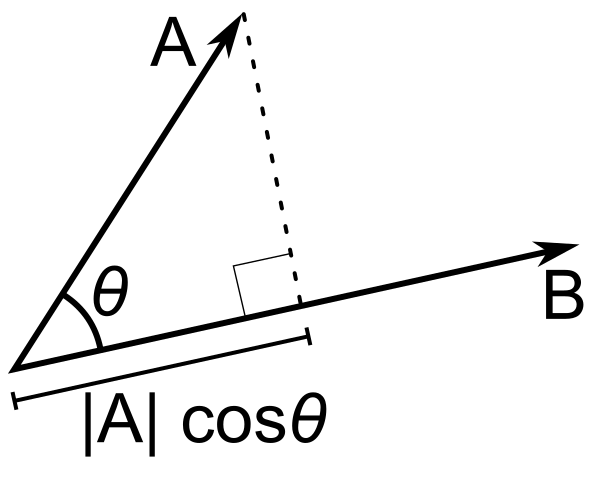

Since you are interested in the more advanced topic of Hodge duality, I expect you are very familiar with linear algebra. I nonetheless recall what you may find obvious: the inner product of one vector with another corresponds to the projection of one vector onto the other. In that sense, the inner product can be understood as a one-dimensional shadow.

Inner product on vectors.

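For the basis vectors in flat Euclidean space, we obtain:

\[\braket{∂_x|∂_x} = \braket{∂_y|∂_y} = \braket{∂_z|∂_z} = 1, \quad \braket{∂_i|∂_j} = 0 \text{ for } i ≠ j\]

For the basis 4-vectors in flat Minkowski space with signature \((+,-,-,-)\), we obtain:

\[\braket{∂_t|∂_t} = 1, \quad \braket{∂_x|∂_x} = \braket{∂_y|∂_y} = \braket{∂_z|∂_z} = -1, \quad \braket{∂_μ|∂_ν} = 0 \text{ for } μ ≠ ν\]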
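Both sets of values can be reproduced from a metric matrix. The following Python sketch assumes the inner product \(\braket{u|v} = \sum_{ij} u_i\, g_{ij}\, v_j\) on component lists, with the basis order \(∂_t, ∂_x, ∂_y, ∂_z\) in Minkowski space and \(∂_x, ∂_y, ∂_z\) in Euclidean space; `inner` and `basis` are illustrative names.

```python
EUCLIDEAN = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
# Minkowski metric with signature (+,-,-,-), basis order (∂_t, ∂_x, ∂_y, ∂_z)
MINKOWSKI = [[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -1]]

def inner(u, v, g):
    """Inner product <u|v> = sum_ij u_i g_ij v_j for component lists u, v."""
    n = len(g)
    return sum(u[i] * g[i][j] * v[j] for i in range(n) for j in range(n))

def basis(mu, n):
    """Components of the basis vector with index mu in n dimensions."""
    return [1 if k == mu else 0 for k in range(n)]

labels = "txyz"
for mu in range(4):
    row = [inner(basis(mu, 4), basis(nu, 4), MINKOWSKI) for nu in range(4)]
    print(labels[mu], row)
# t [1, 0, 0, 0]
# x [0, -1, 0, 0]
# y [0, 0, -1, 0]
# z [0, 0, 0, -1]
```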

This is the starting point of a procedure that meaningfully lifts the inner product on vectors to inner products on bivectors, trivectors, quadvectors, and, in all generality, k–vectors.

Surfaces, volumes, hypervolumes, and the determinant of matrices

The next step is to highlight the link between the inner product and the determinant of matrices. I recall the relationship between the determinant, surfaces, volumes, and hypervolumes.

Surfaces and the determinant of 2x2 matrices.

The area \(S\) of the parallelogram spanned by the vectors \(a ∂_x + b ∂_y\) and \(c ∂_x + d ∂_y\) is the determinant of the \(2 \times 2\) matrix whose columns contain the components of the two vectors:

\[S = \det\begin{pmatrix} a & c \\ b & d \end{pmatrix} = ad - bc\]

The same result follows from the exterior product of the two vectors:

\[S^{♯♯} = (a ∂_x + b ∂_y) ∧ (c ∂_x + d ∂_y) = (ad - bc)\, ∂_x ∧ ∂_y\]

The notation \(S^{♯♯}\) indicates that the surface is a bivector and not a real number \(S\).

Calculation

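Take the exterior product

\[S^{♯♯} = (a ∂_x + b ∂_y) ∧ (c ∂_x + d ∂_y)\]

Distribute

\[S^{♯♯} = a ∂_x ∧ c ∂_x + a ∂_x ∧ d ∂_y + b ∂_y ∧ c ∂_x + b ∂_y ∧ d ∂_y\]

Remove zero terms and pull the factors out front

\[S^{♯♯} = ad\, ∂_x ∧ ∂_y + bc\, ∂_y ∧ ∂_x\]

Reorganize and conclude

\[S^{♯♯} = ad\, ∂_x ∧ ∂_y - bc\, ∂_x ∧ ∂_y = (ad - bc)\, ∂_x ∧ ∂_y\]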

Calculation in free matrix representation

Using the free matrix representation from the Cartan-Hodge formalism helps organize calculations and yields the same result.

Take the exterior product

Distribute and remove zero terms

Reorganize and conclude
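The distribute, remove zero terms, and reorganize steps translate directly into code. This Python sketch (the names `wedge_two` and `det2` are illustrative) expands \(u ∧ v\) over all pairs of basis vectors, drops \(∂_i ∧ ∂_i\), flips the sign of \(∂_y ∧ ∂_x\), and compares the resulting coefficient of \(∂_x ∧ ∂_y\) with the determinant of the matrix whose columns are the vector components. The numbers for \(a, b, c, d\) are arbitrary.

```python
from itertools import product

def wedge_two(u, v):
    """Coefficient of ∂_x ∧ ∂_y in u ∧ v, by distributing term by term.

    u and v are component lists [x, y]; index 0 -> ∂_x, 1 -> ∂_y.
    """
    coeff = 0
    for i, j in product(range(2), repeat=2):
        if i == j:
            continue  # ∂_i ∧ ∂_i = 0
        sign = 1 if i < j else -1  # ∂_y ∧ ∂_x = -∂_x ∧ ∂_y
        coeff += sign * u[i] * v[j]
    return coeff

def det2(m):
    """Determinant of a 2x2 matrix given as a list of rows."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

a, b, c, d = 3, 1, 2, 4
print(wedge_two([a, b], [c, d]))  # 10
print(det2([[a, c], [b, d]]))     # 10, columns are the vector components
```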

The same can be done to calculate the volume \(V\) of a parallelepiped defined by three vectors.

Volumes and the determinant of 3x3 matrices.

\(r_1 = a ∂_x + b ∂_y + c ∂_z\)

\(r_2 = u ∂_x + v ∂_y + w ∂_z\)

\(r_3 = p ∂_x + q ∂_y + r ∂_z\)

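The volume is the determinant of the \(3 \times 3\) matrix whose columns contain the vector components:

\[V = \det\begin{pmatrix} a & u & p \\ b & v & q \\ c & w & r \end{pmatrix} = a(vr - qw) - u(br - cq) + p(bw - cv)\]

The same result can be obtained by taking the exterior product of the three vectors:

\[r_1 ∧ r_2 ∧ r_3 = V\, ∂_x ∧ ∂_y ∧ ∂_z\]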

Calculation

Wedge the three vectors defining the volume

Expand the first two vectors

Expand with the third vector

Reorder

Conclude
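The same bookkeeping works for the volume. In this Python sketch, `perm_sign`, `wedge3_coeff`, and `det3` are hypothetical helper names: the triple wedge is distributed over all 27 index combinations, terms with a repeated basis vector vanish, and each surviving term is reordered into \(∂_x ∧ ∂_y ∧ ∂_z\) with the sign of its permutation. The result is compared with a cofactor expansion of the \(3 \times 3\) determinant, for arbitrarily chosen components of \(r_1, r_2, r_3\).

```python
from itertools import product

def perm_sign(seq):
    """Sign of the permutation that sorts seq, or 0 if an index repeats."""
    if len(set(seq)) < len(seq):
        return 0  # repeated basis vector: the wedge vanishes
    sign = 1
    for i in range(len(seq)):
        for j in range(i + 1, len(seq)):
            if seq[i] > seq[j]:
                sign = -sign
    return sign

def wedge3_coeff(r1, r2, r3):
    """Coefficient of ∂_x ∧ ∂_y ∧ ∂_z in r1 ∧ r2 ∧ r3, distributing all 27 terms."""
    return sum(perm_sign((i, j, k)) * r1[i] * r2[j] * r3[k]
               for i, j, k in product(range(3), repeat=3))

def det3(m):
    """Cofactor expansion along the first row of a 3x3 matrix (list of rows)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

r1, r2, r3 = [1, 2, 3], [4, 5, 6], [0, 1, 7]
columns = [[r1[i], r2[i], r3[i]] for i in range(3)]  # vectors as columns
print(wedge3_coeff(r1, r2, r3))  # -15
print(det3(columns))             # -15
```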

This procedure generalizes to hypervolumes spanned by k vectors in k dimensions: the hypervolume is the determinant of a \(k \times k\) matrix or, equivalently, the coefficient obtained by taking the exterior product of the k vectors.
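For k vectors in k dimensions, distributing the exterior product and keeping only terms with distinct indices is exactly the Leibniz formula for the determinant. The following Python sketch (all function names hypothetical) computes the wedge coefficient that way and cross-checks it against Gaussian elimination with exact fractions, for an arbitrarily chosen set of four vectors in four dimensions. The Leibniz sum has k! terms, so it is only meant for small k.

```python
from fractions import Fraction
from itertools import permutations

def perm_sign(p):
    """+1 for an even permutation of 0..k-1, -1 for an odd one."""
    sign = 1
    for i in range(len(p)):
        for j in range(i + 1, len(p)):
            if p[i] > p[j]:
                sign = -sign
    return sign

def wedge_coeff(vectors):
    """Coefficient of the volume element in v_1 ∧ ... ∧ v_k.

    Only terms with distinct indices survive; each is reordered into
    the volume element with the sign of its permutation.
    """
    total = 0
    for p in permutations(range(len(vectors))):
        term = perm_sign(p)
        for vec, index in zip(vectors, p):
            term *= vec[index]
        total += term
    return total

def det_elimination(columns):
    """Determinant by Gaussian elimination with exact arithmetic."""
    k = len(columns)
    m = [[Fraction(columns[c][r]) for c in range(k)] for r in range(k)]
    det = Fraction(1)
    for col in range(k):
        pivot = next((r for r in range(col, k) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap flips the sign
        det *= m[col][col]
        for r in range(col + 1, k):
            factor = m[r][col] / m[col][col]
            for c in range(col, k):
                m[r][c] -= factor * m[col][c]
    return det

vectors = [[2, 0, 1, 3], [1, 1, 0, 2], [0, 3, 1, 1], [4, 1, 2, 0]]
print(wedge_coeff(vectors), det_elimination(vectors))  # -32 -32
```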

Inner product of vectors, bivectors, and trivectors in 3-dimensional Euclidean space

In essence, the inner product can be understood as measuring shadows. The inner product of two vectors measures the 1-dimensional shadow of one vector onto the other. Similarly, the inner product of two bivectors measures the shadow of one surface onto the other. This 2-dimensional shadow can be calculated from the determinant of a \(2 \times 2\) matrix. We then generalize to 3 dimensions with the determinant of \(3 \times 3\) matrices, corresponding to the volumes spanned by three vectors. One step further, a k-dimensional shadow can be calculated using \(k \times k\) matrices, corresponding to hypervolumes of dimension k.

This provides a meaningful way to lift the inner product from vectors to bivectors, trivectors, and k–vectors. Lifting the inner product in turn allows us to generalize the Hodge dual to any metric signature and to apply it to Minkowski space with metric signature \((+,-,-,-)\).

In 3-dimensional Euclidean space, the inner product of the basis vectors, denoted with either the dot symbol \(\cdot\) or the bracket symbol \(\braket{|}\), is given by:

\[∂_i \cdot ∂_j = \braket{∂_i|∂_j} = δ_{ij}\]

Fully expanded, we have the following dot products for each basis vector combination:

\[∂_x \cdot ∂_x = ∂_y \cdot ∂_y = ∂_z \cdot ∂_z = 1, \quad ∂_x \cdot ∂_y = ∂_y \cdot ∂_z = ∂_z \cdot ∂_x = 0\]

A first hint that the inner product can be naturally generalized to surfaces is that, in 3 dimensions, we can associate a basis surface with each basis vector through the Hodge dual, as argued at the beginning of this article. Since \(∂_x\) is associated with \(∂_y ∧ ∂_z\), it then feels natural to expect that \(\braket{∂_x|∂_x}=1\) implies \(\braket{∂_y ∧ ∂_z | ∂_y ∧ ∂_z}=1\).

Let us consider two vectors \(a^♯\) and \(b^♯\) in 3-dimensional Euclidean space, written in component form as:

\(a^♯ = p \, ∂_x + q \, ∂_y + r \, ∂_z\)

\(b^♯ = u \, ∂_x + v \, ∂_y + w \, ∂_z\)

Now consider the components of \(a^♯\) and \(b^♯\) along the unit vectors \(∂_x\) and \(∂_y\):

\(p \, ∂_x + q \, ∂_y\)

\(u \, ∂_x + v \, ∂_y\)

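The shadow cast by the surface spanned by \(a^♯\) and \(b^♯\) onto the \(∂_x ∧ ∂_y\) plane is measured by the inner product on bivectors. The determinant thereby lifts the inner product from vectors to bivectors:

\[\braket{a^♯ ∧ b^♯ | ∂_x ∧ ∂_y} = \det\begin{pmatrix} p & u \\ q & v \end{pmatrix} = pv - qu\]

In the same manner, we obtain:

\[\braket{a^♯ ∧ b^♯ | ∂_y ∧ ∂_z} = \det\begin{pmatrix} q & v \\ r & w \end{pmatrix} = qw - rv, \quad \braket{a^♯ ∧ b^♯ | ∂_z ∧ ∂_x} = \det\begin{pmatrix} r & w \\ p & u \end{pmatrix} = ru - pw\]

With these quantities, we have measured the shadow of the surface spanned by \(a^♯\) and \(b^♯\) onto the unit bivectors \(∂_y ∧ ∂_z\), \(∂_z ∧ ∂_x\), and \(∂_x ∧ ∂_y\). We can rewrite the expressions slightly to relate the vector components to the inner product of vectors. The components are obtained by dotting the vectors with the basis vectors:

\[p = \braket{a^♯|∂_x}, \quad q = \braket{a^♯|∂_y}, \quad r = \braket{a^♯|∂_z}, \quad u = \braket{b^♯|∂_x}, \quad v = \braket{b^♯|∂_y}, \quad w = \braket{b^♯|∂_z}\]

Put together in condensed form, for any basis bivector \(∂_i ∧ ∂_j\):

\[\braket{a^♯ ∧ b^♯ | ∂_i ∧ ∂_j} = \det\begin{pmatrix} \braket{a^♯|∂_i} & \braket{b^♯|∂_i} \\ \braket{a^♯|∂_j} & \braket{b^♯|∂_j} \end{pmatrix}\]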
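The determinant formula can be tested numerically, here with the basis bivector replaced by the wedge of two arbitrary vectors \(c\) and \(d\). This Python sketch assumes Euclidean components and uses the hypothetical helpers `bivector_inner`, which evaluates the \(2 \times 2\) determinant of vector inner products, and `shadow`, which returns the component of \(a ∧ b\) on \(∂_i ∧ ∂_j\). Summing the products of the shadows on the three basis planes gives the same number; this agreement is an instance of the Cauchy–Binet formula. For \(c = a\) and \(d = b\), the result is the squared area of the parallelogram.

```python
from itertools import combinations

def dot(u, v):
    """Euclidean inner product of two component lists."""
    return sum(x * y for x, y in zip(u, v))

def bivector_inner(a, b, c, d):
    """<a ∧ b | c ∧ d> = det [[<a|c>, <b|c>], [<a|d>, <b|d>]]."""
    return dot(a, c) * dot(b, d) - dot(b, c) * dot(a, d)

def shadow(a, b, i, j):
    """Component of a ∧ b on ∂_i ∧ ∂_j: the signed area of its shadow on that plane."""
    return a[i] * b[j] - a[j] * b[i]

a, b = [1, 2, 3], [4, 5, 6]
c, d = [0, 1, 7], [2, 0, 1]

gram = bivector_inner(a, b, c, d)
shadows = sum(shadow(a, b, i, j) * shadow(c, d, i, j)
              for i, j in combinations(range(3), 2))
print(gram, shadows)               # 87 87
print(bivector_inner(a, b, a, b))  # 54, the squared area of the parallelogram
```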

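With this, we can measure any bivector against the basis bivectors. In particular, we can replace \(a^♯\) and \(b^♯\) with any of the basis vectors \(∂_x\), \(∂_y\), or \(∂_z\). We now have a technique to determine the inner product of basis bivectors:

\[\braket{∂_i ∧ ∂_j | ∂_k ∧ ∂_l} = \det\begin{pmatrix} \braket{∂_i|∂_k} & \braket{∂_j|∂_k} \\ \braket{∂_i|∂_l} & \braket{∂_j|∂_l} \end{pmatrix}\]

This yields:

\[\braket{∂_y ∧ ∂_z | ∂_y ∧ ∂_z} = \braket{∂_z ∧ ∂_x | ∂_z ∧ ∂_x} = \braket{∂_x ∧ ∂_y | ∂_x ∧ ∂_y} = 1\]

All other inner products between basis bivectors are zero. For example:

\[\braket{∂_x ∧ ∂_y | ∂_y ∧ ∂_z} = \det\begin{pmatrix} \braket{∂_x|∂_y} & \braket{∂_y|∂_y} \\ \braket{∂_x|∂_z} & \braket{∂_y|∂_z} \end{pmatrix} = \det\begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix} = 0\]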

In summary, we obtain:

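Finally, we lift the inner product to trivectors. In 3-dimensional Euclidean space, we get for the unit trivector:

\[\braket{∂_x ∧ ∂_y ∧ ∂_z | ∂_x ∧ ∂_y ∧ ∂_z} = \det\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} = 1\]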

In table form, we have:

We have thus found a meaningful and reasonable way to extend the inner product to k–vectors, and the same procedure carries over to Minkowski space. The approach remains meaningful there because the lifted inner products are built entirely from the inner products of the basis vectors, which inherently incorporate the metric signature.
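Since the lifted inner product only ever uses the inner products of basis vectors, a single function can handle any metric. In this Python sketch, `blade_inner` (a hypothetical name) builds the matrix of basis-vector inner products for two basis blades given as index tuples and takes its determinant. With the Euclidean metric, it gives 1 for each basis bivector with itself and for the unit trivector. The sketch orders basis bivectors by index, so it lists \(∂_x ∧ ∂_z\) rather than \(∂_z ∧ ∂_x\); both have the same inner product with themselves. Swapping in a metric with signature \((+,-)\) immediately changes the sign of a bivector's inner product with itself.

```python
from itertools import combinations, permutations

def perm_sign(p):
    """+1 for an even permutation of 0..k-1, -1 for an odd one."""
    sign = 1
    for i in range(len(p)):
        for j in range(i + 1, len(p)):
            if p[i] > p[j]:
                sign = -sign
    return sign

def det(m):
    """Leibniz formula; adequate for the small matrices used here."""
    total = 0
    for p in permutations(range(len(m))):
        term = perm_sign(p)
        for row, col in enumerate(p):
            term *= m[row][col]
        total += term
    return total

def blade_inner(I, J, g):
    """<∂_I | ∂_J> for basis blades given as index tuples, with metric matrix g.

    Entry (r, c) of the matrix is <∂_{I[c]} | ∂_{J[r]}> = g[I[c]][J[r]].
    """
    return det([[g[i][j] for i in I] for j in J])

EUCLIDEAN = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
NAMES = "xyz"

for I in combinations(range(3), 2):
    row = [blade_inner(I, J, EUCLIDEAN) for J in combinations(range(3), 2)]
    print(f"∂{NAMES[I[0]]}∧∂{NAMES[I[1]]}", row)
print(blade_inner((0, 1, 2), (0, 1, 2), EUCLIDEAN))  # 1

# Same code, metric with signature (+,-) in two dimensions
SIGNATURE_PLUS_MINUS = [[1, 0], [0, -1]]
print(blade_inner((0, 1), (0, 1), SIGNATURE_PLUS_MINUS))  # -1
```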

Inner product of k–vectors in Minkowski space

In Minkowski space, the inner product of the basis vectors is:

\[\braket{∂_μ|∂_ν} = η_{μν}, \quad η = \operatorname{diag}(1, -1, -1, -1)\]

Fully expanded in table form we have:

We use our procedure for lifting the inner product to bivectors:

In table form, we obtain:

Calculations

In this dropdown section, I extend the inner product to all non-zero bivector combinations and provide an example of a zero combination.

Basis bivectors involving the temporal dimension

Basis bivectors involving the spatial dimensions

Basis bivectors resulting in zero inner products

All other inner products are zero. I demonstrate one example calculation, assuming the remaining cases will be clear to the reader.
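The whole bivector table can be generated in a few lines. This Python sketch assumes the basis order \(∂_t, ∂_x, ∂_y, ∂_z\) and the metric \(η = \operatorname{diag}(1,-1,-1,-1)\); `bivector_inner` is an illustrative name for the \(2 \times 2\) determinant \(η_{ik}η_{jl} - η_{jk}η_{il}\). Only the non-zero entries are printed, using index-sorted bivectors such as \(∂_x ∧ ∂_z\); for \(∂_z ∧ ∂_x\), the inner product with itself is the same.

```python
from itertools import combinations

# Minkowski metric (+,-,-,-) in the basis order ∂_t, ∂_x, ∂_y, ∂_z
ETA = [[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -1]]
NAMES = "txyz"

def bivector_inner(I, J, g=ETA):
    """<∂_i ∧ ∂_j | ∂_k ∧ ∂_l> = det [[g_ik, g_jk], [g_il, g_jl]]."""
    (i, j), (k, l) = I, J
    return g[i][k] * g[j][l] - g[j][k] * g[i][l]

basis = list(combinations(range(4), 2))
for I in basis:
    for J in basis:
        value = bivector_inner(I, J)
        if value != 0:
            print(f"<∂{NAMES[I[0]]}∧∂{NAMES[I[1]]}|∂{NAMES[J[0]]}∧∂{NAMES[J[1]]}> = {value}")
# Prints -1 for ∂t∧∂x, ∂t∧∂y, ∂t∧∂z and 1 for ∂x∧∂y, ∂x∧∂z, ∂y∧∂z
```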

As well as for trivectors:

Calculations

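In Minkowski space, all quadvectors are proportional to \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\), so the only inner product to compute is:

\[\braket{∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z | ∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z} = \det\begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & -1 & 0 & 0 \\ 0 & 0 & -1 & 0 \\ 0 & 0 & 0 & -1 \end{pmatrix} = -1\]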
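For an orthogonal basis, the matrix of inner products of a basis blade with itself is diagonal, so its determinant reduces to the product of the metric entries of the basis vectors involved. The following Python sketch uses that shortcut; it assumes the diagonal Minkowski metric and would not be valid for a non-diagonal one. `self_inner` is a hypothetical name, and blades are written as strings such as `"txy"`. It lists the trivectors and the quadvector.

```python
from itertools import combinations
from math import prod

# Diagonal of the Minkowski metric (+,-,-,-) for ∂_t, ∂_x, ∂_y, ∂_z
ETA_DIAG = {"t": 1, "x": -1, "y": -1, "z": -1}

def self_inner(blade):
    """<blade|blade> for a basis blade such as "txy", assuming a diagonal metric.

    The matrix of inner products is then diagonal, so its determinant is
    the product of the metric entries of the basis vectors in the blade.
    """
    return prod(ETA_DIAG[axis] for axis in blade)

for blade in ("".join(c) for c in combinations("txyz", 3)):
    print(blade, self_inner(blade))
print("txyz", self_inner("txyz"))
# txy 1
# txz 1
# tyz 1
# xyz -1
# txyz -1
```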

Formal and natural definition

In 3-dimensional Euclidean space, the Hodge dual of a k–vector \(β\) is uniquely defined by the property that, for every k–vector \(α\), the following holds:

\[α ∧ ⋆β = \braket{α|β}\, ∂_x ∧ ∂_y ∧ ∂_z\]

In essence, this asks: given a k–vector, which \((n-k)\)–vector fills the remaining space, where \(n\) is the number of dimensions? The inner product \(\braket{α|β}\) fixes the sign and magnitude, which makes \(⋆β\) unique. This question serves both as a natural definition and as a practical method of calculation. For 4-dimensional Minkowski space, the definition is:

\[α ∧ ⋆β = \braket{α|β}\, ∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\]

For instance, when seeking the Hodge dual \(⋆(∂_t ∧ ∂_x)\), we know that it must be proportional to \(∂_y ∧ ∂_z\) to complete the space, with the sign determined by the inner product \(\braket{∂_t ∧ ∂_x | ∂_t ∧ ∂_x} = -1\):

\[(∂_t ∧ ∂_x) ∧ ⋆(∂_t ∧ ∂_x) = -\,∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\]

Therefore, in this example, we obtain:

\[⋆(∂_t ∧ ∂_x) = -∂_y ∧ ∂_z\]
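The reasoning of this example can be automated for any basis blade. In this Python sketch, `hodge_coefficient` and `reorder_sign` are hypothetical names, blades are written as strings such as `"tx"`, and the metric is assumed diagonal with signature \((+,-,-,-)\). Given a blade and the blade that completes the space, it computes the sign picked up by reordering their wedge into \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\) and combines it with the inner product, following the defining property \(α ∧ ⋆β = \braket{α|β}\, ∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\).

```python
from math import prod

AXES = "txyz"
ETA_DIAG = {"t": 1, "x": -1, "y": -1, "z": -1}  # signature (+,-,-,-)

def reorder_sign(word):
    """Sign picked up when reordering the basis vectors in word into t, x, y, z order."""
    positions = [AXES.index(axis) for axis in word]
    sign = 1
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if positions[i] > positions[j]:
                sign = -sign
    return sign

def hodge_coefficient(blade, complement):
    """Coefficient c in ⋆(∂_blade) = c ∂_complement for a basis blade.

    From blade ∧ ⋆blade = <blade|blade> ∂_t∧∂_x∧∂_y∧∂_z and
    blade ∧ complement = reorder_sign * ∂_t∧∂_x∧∂_y∧∂_z, with reorder_sign = ±1.
    """
    if sorted(blade + complement) != sorted(AXES):
        raise ValueError("blade and complement must together fill t, x, y, z")
    inner = prod(ETA_DIAG[axis] for axis in blade)  # diagonal metric assumed
    return inner * reorder_sign(blade + complement)

print(hodge_coefficient("tx", "yz"))  # -1: ⋆(∂_t ∧ ∂_x) = -∂_y ∧ ∂_z
print(hodge_coefficient("ty", "zx"))  # -1: ⋆(∂_t ∧ ∂_y) = -∂_z ∧ ∂_x
```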

Duality in Minkowski space

With the formal definition of the Hodge dual and the calculated inner products on k–vectors, we systematically compute the Hodge dual in Minkowski space using the mostly negative metric signature \((+,-,-,-)\). I first give the Hodge duals of k–vectors, as developed throughout this page, and then extend them to k–forms.

For a straightforward and alternative calculation of the Hodge duals using the interior product \(⌟\), you can have a look at the page Hodge dual computations.

k–vectors

Calculations

Determine the Hodge duals up to the sign

To obtain the volume element \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\), the Hodge duals must be proportional to:

Check the sign

Since the above was determined by inspection, we check it by wedging each left-hand side with the corresponding right-hand side, making sure that the sign is positive when the result is reordered into the volume element \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\).

Use the definition of the Hodge dual

Reorder

Apply the values of the inner products

Conclude

If you prefer a more mechanical approach, I refer you to Michael Penn’s video Differential Forms | The Minkowski metric and the Hodge operator.

Calculations

To obtain the volume element \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\), the Hodge duals must be proportional to:

For example, taking the second entry, \(⋆ (∂_t ∧ ∂_y) \propto ∂_z ∧ ∂_x\), we have:

Taken all together and with the inner product, we have:

Which simplifies to:

Calculations

To obtain the volume element \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\), the Hodge duals must be proportional to:

Indeed, we check this for all entries:

Taken all together and with the inner product:

Calculations

To obtain the volume element \(∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\), the Hodge duals must be proportional to:

Taken all together and with the inner product:
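All four calculations can be reproduced, and the defining property checked, with a short script. This Python sketch assumes a diagonal metric with signature \((+,-,-,-)\) and writes blades as strings; the function names are hypothetical. Duals are expressed on index-sorted blades, so for instance it reports \(⋆(∂_t ∧ ∂_y) = +∂_x ∧ ∂_z\), which is the same as \(-∂_z ∧ ∂_x\). The final loop asserts \(α ∧ ⋆β = \braket{α|β}\, ∂_t ∧ ∂_x ∧ ∂_y ∧ ∂_z\) for every pair of basis blades of equal grade.

```python
from itertools import combinations
from math import prod

AXES = "txyz"
ETA_DIAG = {"t": 1, "x": -1, "y": -1, "z": -1}  # signature (+,-,-,-)

def sort_blade(word):
    """Return (sign, sorted word) for a wedge of basis vectors; (0, "") if one repeats."""
    if len(set(word)) < len(word):
        return 0, ""
    positions = [AXES.index(axis) for axis in word]
    sign = 1
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if positions[i] > positions[j]:
                sign = -sign
    return sign, "".join(sorted(word, key=AXES.index))

def inner(a, b):
    """<∂_a | ∂_b> for index-sorted basis blades; diagonal metric assumed."""
    return prod(ETA_DIAG[axis] for axis in a) if a == b else 0

def hodge(blade):
    """⋆(∂_blade) as (coefficient, complementary index-sorted blade)."""
    complement = "".join(axis for axis in AXES if axis not in blade)
    sign, _ = sort_blade(blade + complement)
    return inner(blade, blade) * sign, complement

def blades(k):
    """All index-sorted basis k-blades."""
    return ["".join(c) for c in combinations(AXES, k)]

# Duals of all basis k-vectors, k = 0..4 ("1" stands for the scalar 1)
for k in range(5):
    for b in blades(k):
        coeff, comp = hodge(b)
        print(f"⋆{b or '1'} = {coeff:+d} {comp or '1'}")

# Defining property α ∧ ⋆β = <α|β> ∂_t∧∂_x∧∂_y∧∂_z on all basis pairs
for k in range(5):
    for a in blades(k):
        for b in blades(k):
            coeff, comp = hodge(b)
            sign, word = sort_blade(a + comp)
            assert coeff * sign == inner(a, b)
            assert sign == 0 or word == AXES
```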

k–forms

Having established the Hodge dual of k–vectors, we now apply the same procedure to k–forms. As with k–vectors, the Hodge dual on k–forms is defined by the property that, for all k–forms \(α\) and \(β\), the following equation holds:

\[α ∧ ⋆β = \braket{α|β}\, dt ∧ dx ∧ dy ∧ dz\]

Here, \(\braket{α|β} = α · β\) is the inner product on k–forms, lifted from the inner product on 1–forms as previously investigated for k–vectors:

Consequently we obtain:
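Why the results for k–forms match those for k–vectors can be checked directly, under the standard assumption that the inner product on 1–forms is given by the inverse metric, \(\braket{dx^μ|dx^ν} = η^{μν}\). For \(η = \operatorname{diag}(1,-1,-1,-1)\), the inverse is \(η\) itself, so every lifted inner product, and hence every Hodge dual, keeps the same sign when \(∂_μ\) is replaced by \(dx^μ\). The Python sketch below (helper names hypothetical) verifies this for all basis bivectors.

```python
from fractions import Fraction
from itertools import combinations

# Minkowski metric (+,-,-,-) on the basis vectors ∂_t, ∂_x, ∂_y, ∂_z
ETA = [[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -1]]

def inverse_diagonal(g):
    """Inverse of a diagonal metric: invert each diagonal entry."""
    n = len(g)
    return [[Fraction(1, g[i][i]) if i == j else 0 for j in range(n)]
            for i in range(n)]

# Assumption: <dx^mu | dx^nu> is given by the inverse metric eta^{mu nu}
ETA_INV = inverse_diagonal(ETA)

def bivector_inner(I, J, g):
    """<e_i ∧ e_j | e_k ∧ e_l> as a 2x2 determinant of inner products from g."""
    (i, j), (k, l) = I, J
    return g[i][k] * g[j][l] - g[j][k] * g[i][l]

pairs = list(combinations(range(4), 2))
same = all(bivector_inner(I, J, ETA) == bivector_inner(I, J, ETA_INV)
           for I in pairs for J in pairs)
print(ETA_INV == ETA, same)  # True True
```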